Teaching Your AI Scribe to See: What I Learned in Three Shifts

Less time documenting. More mental bandwidth for patient care. Three shifts with an AI scribe have fundamentally changed how I practice. This guide shares practical communication strategies that don't just improve charting; they make you a better physician.

Working with an AI scribe is like working with a highly competent human scribe who can't see.

My Early Experience

I spent three hours yesterday morning in PIT (provider in triage) with a third-year resident. In that time, I saw 8 to 10 patients while supervising the resident, who was managing their own patients. By the time I walked back to the main emergency department, all of my notes were done.

That's not something that would have happened before we started using AI scribes.

This was only my third shift using our ambient scribe tool. But I've been using AI daily for the past couple of years, for writing, research, and workflow optimization. More importantly, I've spent significant time with dictation software like Superwhisper, learning how to structure my speech so AI can process it effectively. That experience translated directly to clinical documentation once I understood what was happening.

The shift in my practice has been profound. It's not just documentation efficiency; it's the mental space that opens up when you're not carrying that burden through your shift. I'm much more likely now to pick up that extra patient in the last hour, the one who needs sutures or has straightforward cellulitis. Before, I'd hesitate because it meant staying late to chart or, worse, charting at home while my family went to the park. Now the note is done by the time I walk out of the room.

A few of us have noticed this mindset shift. We're more willing to distribute workload because the barrier to seeing "one more patient" has dropped. That's better care for patients, better for department flow, and better for our collective well-being.

But this technology requires you to adapt your communication.

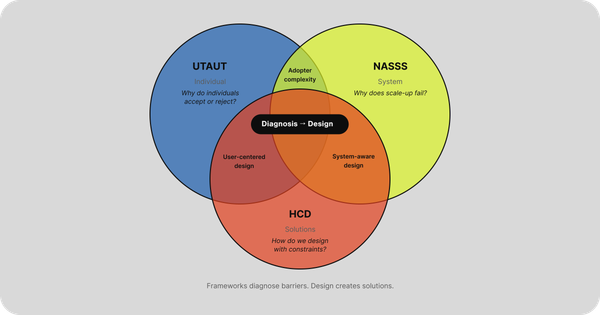

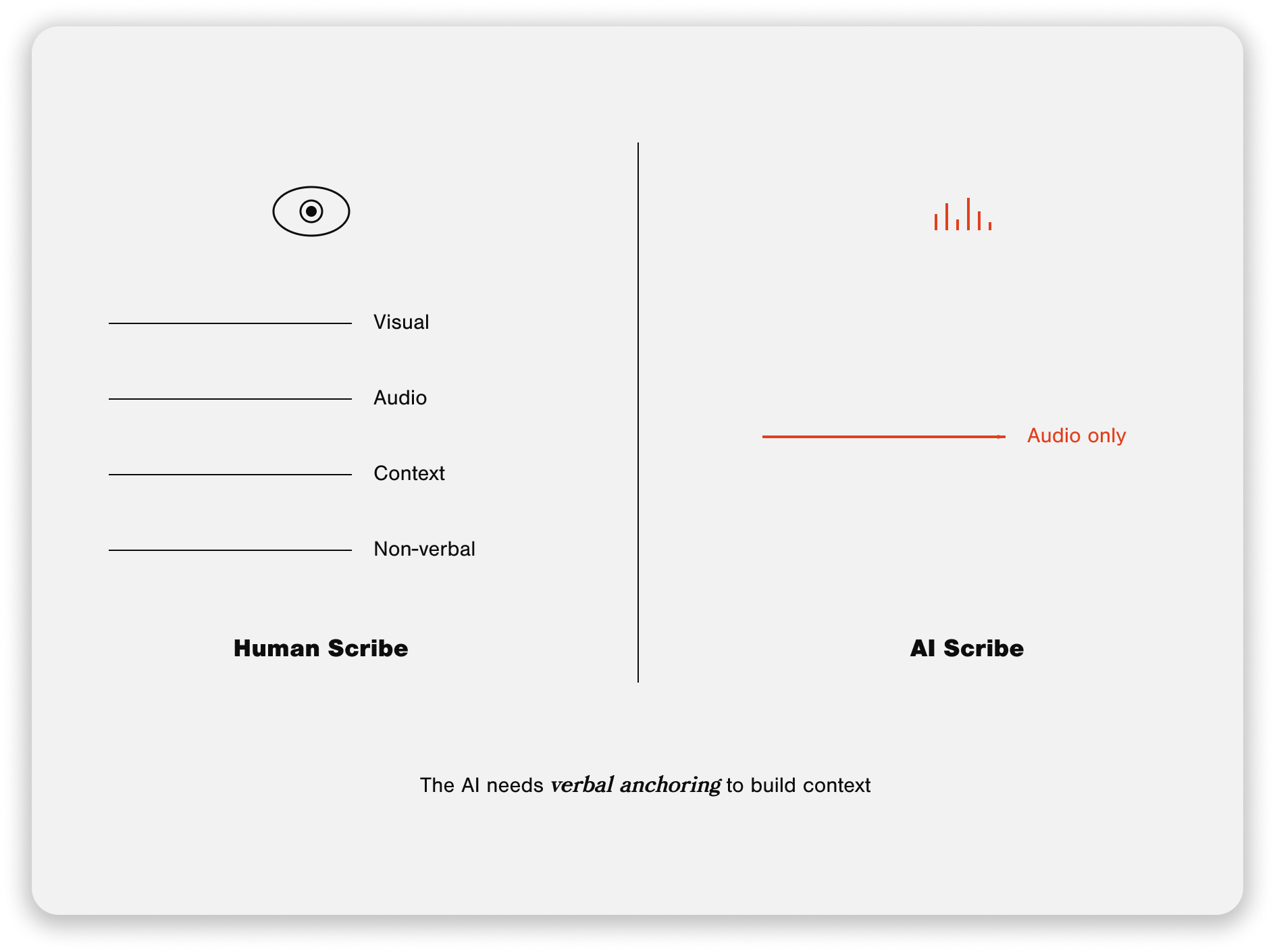

The key insight: Working with an AI scribe is like working with a highly competent human scribe who can't see.

A human scribe constantly picks up visual cues. They see what you're examining, watch you review labs on the screen, and observe the patient's non-verbal responses. They build context through multiple channels.

The AI only has audio. It only knows what it hears.

That's not a limitation to work around; it's a constraint to lean into. Once I understood this, everything clicked. The AI isn't failing when it misplaces information or produces thin documentation. I'm failing to give it the verbal anchors it needs to organize what it's hearing.

This guide shares what I've learned translating my AI experience to clinical documentation. It's structured around three phases that map to how we naturally move through ED encounters. My goal: help you get the same benefits I've experienced—less documentation burden, more mental bandwidth, and greater willingness to see patients throughout your shift.

The technology will evolve. These principles will remain relevant.

How AI Scribes Work (Why Your Communication Matters)

Imagine you're training a new human scribe. They're intelligent, detail-oriented, and adept at processing information quickly. But they're blind.

You'd adjust how you communicate, right? You'd verbalize things you'd typically gesture toward. You'd say "I'm now examining the patient's abdomen" rather than silently palpating while the scribe watches. You'd explicitly state "looking at yesterday's chest X-ray" rather than just pulling it up on the screen.

That's essentially what you're doing with an AI scribe.

The difference between human and AI scribes comes down to how they receive information. A human scribe operates with multiple input channels: they hear what you say and see what's happening. They watch you move from reviewing the chart to entering the room. They observe you examining the patient. They see you pull up labs on the computer. All of this context helps them organize the information they're documenting.

An AI scribe has only one input channel: audio. It's processing a continuous stream of sound and trying to make sense of it. Without visual context, it relies entirely on what it hears to understand what's happening and where information belongs.

Communication theorists call this the difference between multimodal and unimodal communication. When you work with a human scribe, you're building what's called "common ground": mutual understanding that develops through shared context. You both see the same things, experience the same moments, and build shared reference points.

With AI, you have to build that shared mental model verbally. You have to create the context through your words.

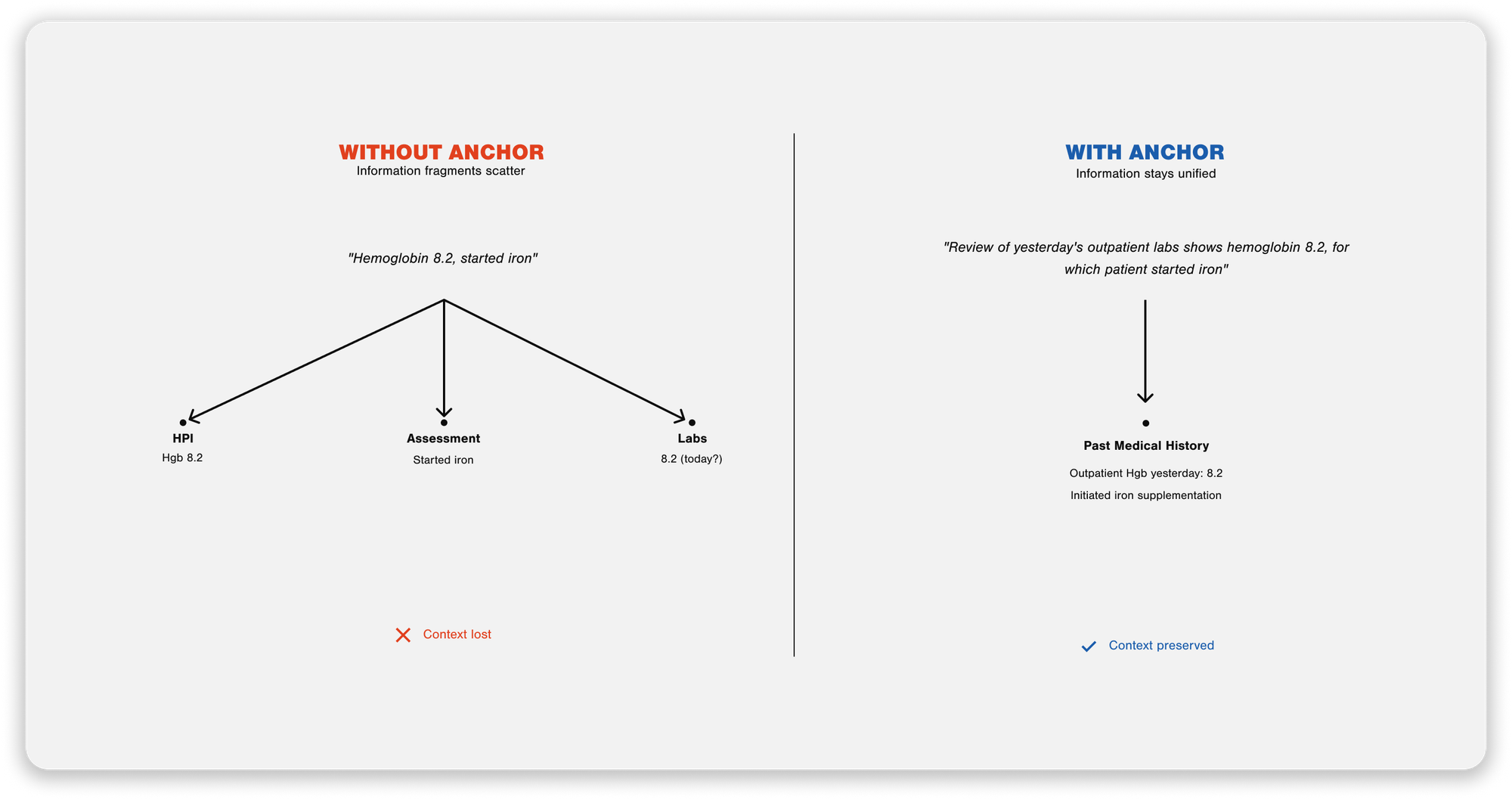

Here's a concrete example of where this breaks down:

You're reviewing a patient's chart before seeing them. You're looking at labs from their outpatient visit yesterday. You say aloud, "Hmm, hemoglobin is 8.2."

A human scribe knows you're reviewing old labs because they see you at the computer, see you clicking through prior visits. The AI just heard "hemoglobin is 8.2" and has no idea when that lab was drawn or why you're discussing it. Without additional context, it might place that information in today's assessment or incorrectly weave it into the history of present illness.

But if you say, "On review of the patient's outpatient labs from yesterday, their hemoglobin was 8.2," the AI has the context it needs.

This is what I mean by verbal anchoring. You're providing explicit markers that help the AI understand:

- What you're doing (reviewing prior labs vs examining the patient vs discussing treatment)

- When things happened (yesterday vs today vs two weeks ago)

- Where information belongs (HPI vs physical exam vs assessment)

The constraint is real. The AI can't see. But here's what I've learned: leaning into this constraint actually makes you a better communicator. When you're explicit about temporal sequences with patients, you catch important details you might have missed. When you think aloud about your differential diagnosis, you're educating your patient and improving your documentation. When you systematically dictate your physical exam, you're less likely to forget findings.

You're not working around the AI's limitations. You're adapting your communication to improve patient care.

The following section breaks down exactly how to do this across the three phases of an ED encounter.

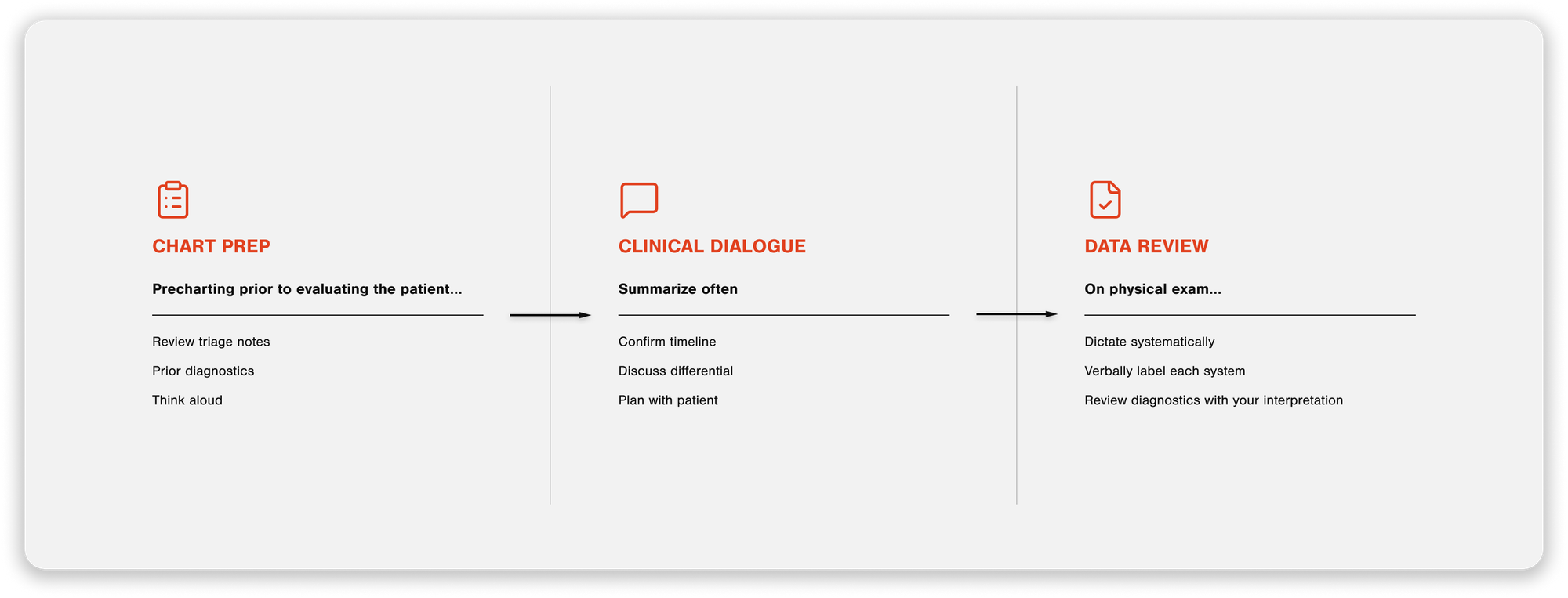

The Three-Phase Workflow

I've realized that using an AI scribe effectively isn't about changing how I practice medicine; it's about being more intentional with how I communicate what I'm already doing. The workflow I've developed maps to how we naturally move through ED encounters, with specific verbal anchoring strategies at each phase.

Phase 1: Chart Prep

This is when I'm reviewing the chart before seeing the patient: triage notes, vitals, recent visits, outside records, prior diagnostics. The challenge here is that the AI doesn't know I'm looking at past information unless I tell it. Without verbal anchoring, this pre-work gets incorrectly placed into the HPI or Assessment.

I start my recording with an explicit verbal label:

"Precharting prior to evaluating the patient...they're here today with abdominal pain..."

Then I think aloud as I review:

"On review of the patient's labs that were done outpatient yesterday, I see their hemoglobin was 8.2..."

"Looking at their recent ED visit from three days ago for the same complaint..."

This creates a clear temporal boundary: it tells the AI this is background information, not part of today's encounter.

Phase 2: Clinical Dialogue

This is the face-to-face encounter with the patient. The AI only hears the conversation; it doesn't see the patient nodding, pointing to where it hurts, or any non-verbal communication in the room.

Consent first. I don't waffle on this:

"My phone is going to take notes while we talk."

That's it. Direct, clear, move on.

Summarize often, especially temporal information.

This has become one of my most effective techniques. When a patient gives me timeline information, I explicitly summarize it back:

"So your headache started suddenly at 2 pm while you were at rest, peaked immediately at 8/10, and has remained constant since then. Is that right?"

"Let me make sure I have this correct: you took your first dose of ibuprofen at 6 am, the second at noon, and you're still having pain now at 3 pm?"

This level of temporal specificity matters clinically and medicolegally. Was the headache "maximal at onset" (concerning for subarachnoid hemorrhage) or "gradual over hours" (more consistent with migraine)? Did the chest pain start "at 2 pm at rest" or "after climbing stairs"? The AI reliably captures these nuances when I verbally confirm them, which is far better than reconstructing timelines from memory when charting later.

Discuss your differential diagnosis in depth.

This serves two purposes: it's excellent patient communication, and it creates robust medical decision-making (MDM) documentation.

I talk through:

- What I think is most likely

- What else it could be

- What testing I'm ordering, and why

- What I'll do based on the results

When you think aloud about your clinical reasoning with the patient, the AI captures it naturally. You're simultaneously educating your patient, involving them in shared decision-making, and documenting comprehensively. No need to recreate this thinking later when you're charting.

Phase 3: Documentation Review

Right after I walk out of the room, I dictate my exam.

I know it feels awkward doing this in front of patients, so I do it immediately after leaving the room while everything is fresh.

I start with: "On physical exam..."

Then systematically go through my findings, labeling each system:

"General: Alert, well-appearing, no acute distress"

"HEENT: Normocephalic, atraumatic, pupils equal and reactive, oropharynx clear"

"Cardiovascular: Regular rate and rhythm, no murmurs"

I picture my documentation template in my mind and narrate what I found.

Back at the computer: reviewing diagnostics.

When labs, imaging, or other results come back, I provide context:

"On my review of the patient's CBC..."

"Looking at the chest X-ray..."

The explicit "On physical exam..." marker tells the AI exactly where this information belongs in the note structure. Contextualizing diagnostic review prevents confusion about when results were obtained or reviewed.

Critical Moments for Verbal Anchoring

Not every moment in an encounter requires the same level of verbal anchoring. Through my first few shifts, I've identified specific situations where being explicit with your communication becomes crucial. Miss these moments, and you'll end up with documentation that's inaccurate or disorganized.

When Reviewing Prior Data vs Current Encounter

This is where I see the most consistent errors. The AI has no way to distinguish between past and present unless you tell it.

The problem: You're looking at labs from an outpatient visit last week while precharting. You think aloud: "Creatinine is elevated at 1.8." Without context, that information can end up in today's assessment or woven into the HPI as if it's a new finding.

The solution: Create explicit temporal boundaries:

- "On review of outpatient labs from last Tuesday, creatinine was 1.8."

- "Looking at their ED visit from three days ago, they received morphine and Zofran."

- "The referral note from their primary care doctor states they've had symptoms for two weeks."

This separates background information from today's encounter, so the AI can distinguish historical context from the current presentation.

During Temporal Sequencing

When patients describe how their symptoms evolved, temporal precision matters clinically and medicolegally. This is where summarizing becomes essential.

The problem: Patients don't naturally tell stories in perfect chronological order. They meander, circle back, remember details out of sequence. If you just let the conversation flow without anchoring, you lose precision about when things happened.

The solution: Explicitly summarize the timeline back to the patient:

- "So your chest pain started at 2 pm today while you were sitting at rest, peaked immediately at 8/10, and has stayed constant. Is that correct?"

- "Let me make sure I understand: the headache began gradually yesterday morning, got worse overnight, and this morning you started vomiting?"

- "You took ibuprofen at 6 am, then again at noon, and you're still having pain now at 3 pm?"

The AI reliably captures this confirmed sequence. You're simultaneously verifying the patient's history and creating precise documentation. The difference between "sudden onset maximal headache" and "gradually worsening headache over 24 hours" is clinically significant. Getting that right matters.

During Transitions

Moving from one part of the encounter to another creates ambiguity for the AI if you don't mark the transition.

The problem: You walk out of the patient's room and immediately start dictating exam findings as you walk down the hallway. The AI doesn't know you've transitioned from patient dialogue to physical exam documentation. Or you're in the middle of documenting and a nurse interrupts with a question about another patient; the AI doesn't know that conversation isn't part of the current note.

The solution: Use explicit verbal markers for transitions:

- "On physical exam..." (before dictating exam findings)

- "Back at the computer, reviewing diagnostics..." (when you're looking at results)

- "Returning to the patient to discuss the plan..." (if you go back in)

Think of these as chapter headings. They tell the AI where information belongs in the note structure.

When Things Get Complicated

Some situations naturally create more opportunity for confusion:

- Multiple problems being addressed: use clear transitions, such as "Now discussing their second complaint of..."

- Interruptions during documentation: pause the recording, or explicitly say "Answering a question about another patient" and then "Returning to documentation for [patient name]."

- Complex medical histories: when discussing multiple prior surgeries, hospitalizations, or treatments, anchor each to a timeframe.

The pattern is consistent: The AI needs you to explicitly state context that would be obvious to a human observer.

When you're heads-down in a shift, it's easy to forget these anchoring moments. It takes a few shifts to internalize them, but then they become automatic. Now I find myself naturally thinking, "I'm transitioning; I need to say that out loud."

Practical Implementation

Managing Your Phone Through a Shift

Using your phone as a recording device for an entire shift creates some logistical challenges. Here's what I've learned:

Current solution: Chest pocket. I keep my phone in the chest pocket of my jacket. It's easily accessible, and the audio quality has been good. The downside is the constant in-and-out when I get calls on my work phone or need to pause recordings between patients.

Testing next: Crossbody phone strap. I've ordered a crossbody strap to keep my phone secured at my side. The goal is to reduce fumbling, especially in those moments when I'm trying to take out my phone, pause a recording, and answer my work phone all at once. I'll report back on how this works.

Battery management: Recording all shift drains your battery faster than typical use. I carry a high-capacity Anker fast charger. It's been essential at the end of shifts.

Settings & Templates

Abridge Settings

Abridge's settings are relatively difficult to find. You have to right-click an open note to open "Linked Evidence." This is where you'll access the AI settings and find the "Library," which is where your templates live.

Explore the AI settings, which let you fine-tune the AI's output. Do you want it more concise and bulleted, or more narrative prose? You'll adjust that here.

In the Library, you'll find physical exam templates. You can create multiple templates; I've made a "General Exam" and a "TRAUMA" (non-trauma trauma – iykyk) template based on dot phrases I previously built in Epic. You can also choose how closely the AI adheres to what you dictate versus what your template contains: it can output the complete template regardless of what you dictate, only what you dictate, or a combination of the two.

Spending time exploring, adjusting, and reassessing these settings will help you dial in the best output for your workflow and reduce the chart corrections you need to make on the back end.

Continuous Learning: The Insight Capture Tool

This technology is evolving rapidly. What works well today might work differently in a month. What feels clunky now might be refined in the next update. The key is capturing what you're learning as you go.

I built a simple web app for this: https://dulcet-kitten-e78a99.netlify.app/

How it works: Save the link to your phone's home screen as a bookmark.

The interface is straightforward:

- Optional reflection prompt: Tap "Get Random Prompt" if you want a nudge to think about something specific.

- Categorize your insight: Select from four categories:

  - Workflow Issue (process problems or inefficiencies)

  - Tech Problem (tech bugs or failures)

  - Design Opportunity (ideas for improvement)

  - Safety Concern (patient safety implications)

- Capture the insight: Type or dictate into the text field.

- Save it: Your insight is added to the list at the bottom.

At the end of your shift (or whenever you have a moment), tap "Export All" to get a formatted summary that copies to your clipboard. Paste it into whatever notes app you use.

Why this matters: We're all learning this together. The patterns you notice might be different from what I've noticed. The bugs you encounter might reveal something about how the AI processes certain types of information. By systematically capturing these observations, we can:

- Identify what consistently works well

- Spot patterns in where the AI struggles

- Share practical strategies across the group

- Provide better feedback to the vendor about what needs improvement

The invitation: Use the tool. Share what you're learning. Let's maintain an ongoing dialogue about what's working and what needs refinement. This isn't a "here's the final answer" guide; it's a starting point. Your experience will shape how we collectively adapt to this technology.

Conclusion

I'm three shifts into using our AI scribe, and I've already experienced a fundamental change in how I practice. Less time spent documenting means more mental bandwidth for patient care and a greater willingness to see that extra patient at the end of the shift.

But the technology requires you to adapt your communication. The "scribe without eyesight" framework has been the key insight for me. Once I understood that constraint, everything else followed naturally.

This guide captures what I've learned so far, but the learning continues. The technology will evolve. The principles remain: be explicit about context, anchor temporal information, mark transitions clearly, and lean into the constraint.

Use the insight capture tool. Share what you discover. Your patterns might be different from mine. The more we learn collectively, the better we'll all get at this.

Start with the three-phase workflow. Pay attention to the critical moments. Be patient with yourself as you build new habits. And remember: you're not working around the AI's limitations. You're developing communication practices that make you a better physician.
